I'm Brian: a geek, a tinkerer, and a sporadic content creator.

I write blogs and create videos about my hobbies: DIY NAS servers, 3D-printing, home automation, and anything else that captures my attention.

Latest Posts

A two-for-one DIY NAS build blog! I detail the components that I would pick for 2024's DIY NAS and EconoNAS builds. Which one will be your favorite?

An inexpensive AI server using an Nvidia Tesla M40 GPU, Proxmox VE, Forge, Oobabooga, remote access via Tailscale, and some leftover spare parts.

I outgrew the case for my DIY NAS, the MK735, and to replace it, I had to buy three products: a Silverstone Technology CS382, an Icy Dock Express Cage MB038SP, and an Icy Dock ToughArmor MB411SPO-2B. I’ll share my review of all three products and talk about the outcome of this upgrade.

I reached out to a creator of 3D-printable computer cases, makerunit, to ask if he was interested in designing a 3D-printed NAS case. And before I knew it, he designed two fantastic DIY NAS cases!

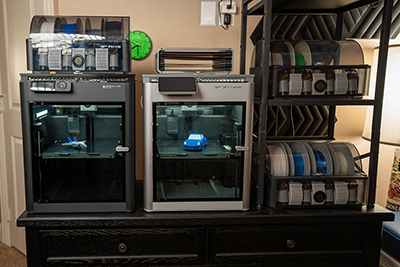

My two-printer arrangement didn't quite work out because the Prusa MK3 couldn't keep up with my Bambu Lab X1C Combo. I solved this problem by buying another Bambu Lab 3D printer!

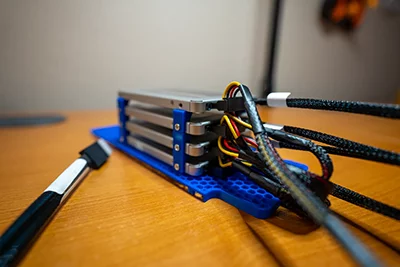

Increasing the capacity of my NAS by 3D-designing and 3D-printing a drive caddy for 4x 2.5" SSDs. Is an all-flash DIY NAS in my future?

My evaluation of an interesting barebones NAS featuring two 3.5-inch hard drive bays, an M.2 NVMe 2280 slot, an Intel N100 CPU, one DDR4 SODIMM, and two 2.5Gbps NICs.

I've been using Octopress 2.0 for over a decade. The time it takes to generate my site, along with declining traffic, made it painfully obvious that a change was overdue.

A small-form-factor, 4-bay DIY NAS featuring a Celeron N5105 CPU, 16GB of RAM, a 128GB NVMe SSD, 2.5Gbps networking, and TrueNAS SCALE for under $400.